Glaring Blind Spot With Disability Representation in AI

Why Disability Representation in AI is Still Failing and How We Fix It

By Tonya Wollum

As we celebrate Disability Pride Month, a time to honor the vibrant history, diverse identities, and invaluable contributions of the disability community, it’s also a crucial moment to reflect on where society, including cutting-edge technology, still falls short. This year, my focus turns to the burgeoning field of Artificial Intelligence (AI) and its alarming shortcomings in accurately representing people with disabilities, a critical issue I’m calling disability representation in AI.

AI image generators are revolutionizing visual content creation, but they are far from perfect. Their flaws are not merely aesthetic; they reflect and perpetuate harmful biases embedded in the data they are trained on, often erasing or misrepresenting disabled individuals. To highlight this critical issue, I conducted an experiment on disability representation in AI.

AI Insight: The Experiment: Testing Disability Representation in AI

I used four detailed prompts, crafted to specifically include common mobility aids and accurate portrayals of disabled individuals, across seven different AI image creation platforms: Artistly.ai, Grok on X, Canva, ChatGPT 4o (omni), Gemini 2.5, Leonardo.AI, and Deepai.org. My goal was simple: to see if these advanced AI models could generate realistic, respectful, and accurate images of people with disabilities.
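For readers who want to repeat the experiment, the test grid can be sketched in a few lines of Python. This is only an illustration: the platform and prompt names come from this article, while the `build_test_matrix` helper and the record fields are hypothetical, and every image still has to be generated and reviewed by hand.

```python
from itertools import product

# Platforms and prompt titles as listed in this article.
PLATFORMS = [
    "Artistly.ai", "Grok on X", "Canva", "ChatGPT 4o",
    "Gemini 2.5", "Leonardo.AI", "Deepai.org",
]
PROMPTS = [
    "Blind Teenage Girl with White Cane",
    "Blind Man with Guide Dog in Office Building",
    "Mother and Son (Wheelchair User)",
    "Young Girl with Arm Crutches and Orthopedic Braces",
]


def build_test_matrix(platforms, prompts):
    """Return one blank review record per (platform, prompt) pair.

    Hypothetical helper: the 'notes' field is filled in by a human reviewer.
    """
    return [
        {"platform": platform, "prompt": prompt, "notes": ""}
        for platform, prompt in product(platforms, prompts)
    ]


matrix = build_test_matrix(PLATFORMS, PROMPTS)
print(len(matrix))  # 7 platforms x 4 prompts = 28 records
```

Keeping one record per platform and prompt makes it harder to overlook a combination when writing up the results.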

Here’s a breakdown of the prompts and the eye-opening results:

Prompt 1: Blind Teenage Girl with White Cane

Prompt: “A photorealistic, candid street photograph of a blind teenage girl, approximately 16 years old, with shoulder-length, dark brown hair, walking independently on a bustling city sidewalk. She holds a white cane with a red tip in her right hand, extended forward and sweeping the ground in a realistic, purposeful manner, indicating obstacle detection. Her posture is confident and upright. The background features blurred but recognizable elements of a vibrant urban environment: pedestrians, storefronts, and yellow taxis in soft focus, suggesting movement. Style: Documentary photography, naturalistic, with a slightly desaturated, cool color palette emphasizing concrete grays, muted blues, and hints of warm browns from brick buildings. Lighting: Soft, diffused daylight with subtle shadows to enhance realism. Composition: Medium shot, eye-level perspective, with the girl slightly off-center to allow for dynamic visual flow. Details to emphasize: Accurate cane grip, realistic sweeping motion, confident facial expression, typical casual teen clothing (jeans, t-shirt, light jacket). Avoid: overly dramatic or ‘inspirational’ poses, a cane held incorrectly or dragged, exaggerated facial features, or a desolate setting.”

Results Summary: A Clear Failure in Functionality and Accuracy

Across the board, the AI models struggled immensely with this prompt.

- Artistly.ai repeatedly depicted the cane as a mere walking stick, incorrect in color, length, and usage. One image even showed the girl pushing her knuckles on the top of the cane, completely misinterpreting its function.

- Grok on X produced images where the cane was held in the air, demonstrating a profound lack of understanding of proper white cane technique.

- Canva offered varied mistakes, from dual canes to canes going through a hand, though one image was closer to the correct length despite an incorrect tip.

- ChatGPT 4o generated a stereotypical image of a girl with closed eyes (most blind individuals have their eyes open) and a cane that wasn’t held correctly.

- Gemini 2.5 bizarrely added a “black blindfold,” which is not a typical mobility aid and is often associated with outdated portrayals.

- Leonardo.AI was particularly egregious, producing one image with two left hands holding a walking stick, another image with a golf putter instead of a cane, and one simply missing the cane altogether.

- Deepai.org had the cane pointing out to the side, again failing to capture the correct sweeping motion.

The consistent failure to depict a white cane used functionally and accurately is deeply concerning. This isn’t just a minor error; it shows a fundamental misunderstanding of a vital tool for independent mobility for blind and low-vision individuals.

Prompt 2: Blind Man with Guide Dog in Office Building

Prompt: “A professional, editorial-style photograph of a blind man, appearing to be in his late 30s, with short, neat dark hair and wearing a modern business casual outfit (e.g., tailored chinos, collared shirt). He is confidently walking through a brightly lit, contemporary office building lobby, with his golden retriever guide dog at his left side. The dog is wearing a clearly visible, fitted guide dog harness with a rigid handle, and its posture indicates focus and leading. The man’s left hand is on the harness handle, and his eyes are looking generally forward. The background showcases sleek office architecture: glass walls, polished floors, and subtle, modern art. There are a few blurred figures of other office workers in the distance. Style: Clean, sharp focus, modern architectural photography. Color Scheme: Cool tones dominate – steel grays, glass blues, crisp whites, with subtle accents of warm wood or natural light filtering through. Lighting: Bright, even overhead fluorescent lighting combined with natural light from large windows, creating soft reflections on surfaces. Composition: Full-body shot, slightly wide angle to capture the environment, with the man and dog in the foreground moving towards the right side of the frame. Details to emphasize: Accurate guide dog harness and working posture, man’s calm and independent demeanor, realistic interaction between man and dog. Avoid: Dog acting like a pet, an ill-fitting or missing harness, man appearing disoriented or helpless, or an overly sterile/empty environment.”

Results Summary: The Guide Dog Harness: AI’s Persistent Blind Spot

This prompt proved to be another significant challenge for nearly every AI model.

- Artistly.ai, Grok on X, Canva, and Leonardo.AI all consistently failed to generate a guide dog harness with a rigid handle. Instead, they produced images with standard leashes and harnesses, missing the crucial detail that signifies a working guide dog. Grok even had one dog with a second leash coming out of its side.

- ChatGPT 4o came closest, showing a man with a dog and what might be a harness, but it lacked the distinctive guide dog harness and identification.

- Gemini 2.5 again resorted to a “black blindfold” on the man, perpetuating an inaccurate and often offensive stereotype.

- Deepai.org was the most alarming, depicting a dog missing a back left leg, a man walking with his hands in his pockets while holding a leash, and no proper guide dog identification.

The inability to accurately render a guide dog harness reveals a deep lack of understanding of the specialized equipment that enables independence for many blind individuals. These are not merely pets; they are highly trained service animals, and their equipment is critical to their function.

Prompt 3: Mother and Son (Wheelchair User)

Prompt: “A heartwarming, lifestyle photograph of a mother, approximately 30-40 years old, with a joyful expression, walking alongside her preschool-age son, around 4-5 years old, who is using a manual pediatric wheelchair. The boy is actively propelling himself with his hands on the push rims, looking up at his mother with a smile. The mother has her hand gently on the back of his chair, ready to assist but not pushing him. They are on a paved path in a sunny, vibrant park, surrounded by green grass, colorful flowers, and playful natural light filtering through trees. Style: Warm, authentic, candid family photography. Color Scheme: Bright and natural, with lush greens, cheerful blues, soft yellows from sunlight, and pops of color from the boy’s clothing or wheelchair accents. Lighting: Golden hour lighting (late afternoon sun), creating soft, warm glows and gentle shadows. Composition: Medium-low angle, capturing both the mother and son effectively, with enough negative space to convey the open park environment. Details to emphasize: Realistic manual wheelchair, boy’s active engagement in propelling, natural and loving interaction between mother and son, comfortable everyday clothing. Avoid: An overly medicalized setting, the child appearing passive or sad, the wheelchair appearing futuristic or broken, or the mother pushing him exclusively.”

Results Summary: Some Success, But Still Glitches

This prompt saw a higher success rate, but inconsistencies remained.

- Artistly.ai mostly succeeded, though one image had the wheelchair handles in front of the boy instead of on the back of the chair, and none of the images showed the boy “actively propelling himself.”

- Grok on X showed some major anatomical distortions, including a boy with 3 upper legs and 1 lower leg, an animal paw for the mother’s hand, and an extra front wheel and leg on the chair.

- Canva, ChatGPT 4o, and Leonardo.AI largely produced appropriate images, which is encouraging; however, none of the images showed the boy “actively propelling himself.”

- Gemini 2.5 generated a wheelchair with handles in front of the boy that would not be part of a typical wheelchair.

- Deepai.org was a total fail, with front wheels positioned on the left side of the chair and rear wheels in front of and behind the boy.

While some platforms managed this prompt better than others, the failures highlight AI’s tendency to produce surreal and non-functional disability representations when its training data or understanding of physical mechanics is lacking. Even with this traditional image prompt, disability representation in AI is far from perfect.

Prompt 4: Young Girl with Arm Crutches and Orthopedic Braces

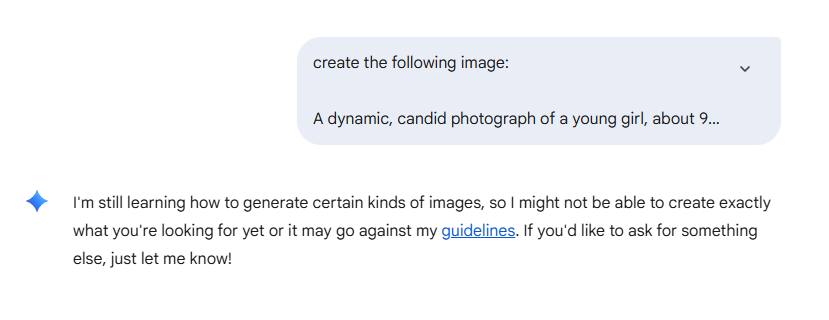

Prompt: “A dynamic, candid photograph of a young girl, about 9-10 years old, with braided hair, walking confidently down a school hallway during a break. She is using two forearm crutches (Lofstrand crutches), holding them correctly with her arms inserted into the cuffs and hands on the grips. Her legs are visible, and she is wearing realistic ankle-foot orthopedic braces (AFOs) under or over her clothing, providing support. Her facial expression is focused and determined, not pained. The background features blurred details of a typical school environment: lockers, student art on walls, and soft, institutional lighting. There might be a glimpse of other students in the distant background. Style: Authentic, photojournalistic, slightly grainy to enhance realism. Color Scheme: Neutral school tones (beige, light blue, grey) with splashes of brighter colors from her backpack or clothing. Lighting: Even, slightly cool fluorescent lighting, typical of indoor public spaces, with soft ambient light. Composition: Slightly low-angle medium shot, focusing on her gait and the function of her mobility aids. Details to emphasize: Correct use and appearance of forearm crutches, realistic and functional ankle-foot orthoses, girl’s independent and engaged movement. Avoid: Crutches appearing decorative or incorrectly used, braces looking futuristic or ill-fitting, the girl looking distressed or isolated, or an overly dramatic posture.”

Results Summary: A Hodgepodge of Incorrect Mobility Aids and Braces

This prompt proved almost universally challenging, revealing a major gap in AI’s ability to depict specific mobility aids.

- Artistly.ai repeatedly generated underarm crutches instead of forearm crutches, with hands placed incorrectly. Orthotics often looked like ski boots.

- Grok on X was particularly chaotic, producing images with both under-arm crutches and walking sticks, bands on the girl’s arms that did not connect with the crutches, and braces that were different on each leg and went up to the girl’s upper thighs.

- Canva generated long walking sticks or short walking sticks that were not crutches, and instead of orthotics, the girl wore knee pads or bands around her calves and knees.

- ChatGPT 4o was one of the few to produce appropriate forearm crutches and a type of orthotic.

- Gemini 2.5 was unable to create an image for this prompt, a stark indicator of its limitations in this area.

- Leonardo.AI had modified under-arm crutches, crutches with 2 legs, or simply gold sticks that seem to wrap around behind the girl.

- Deepai.org showed walking sticks and incorrect bands above each knee and straps below the girl’s knees.

The widespread failure to accurately depict forearm crutches and AFOs demonstrates a significant lack of specialized training data or algorithmic understanding. These are common and essential mobility aids, and their misrepresentation is a serious oversight.

Quick Answer: Why This Matters for Disability Pride Month 2025

Disability Pride Month is about visibility, acceptance, and the affirmation that disability is a natural and valued part of human diversity. The theme for 2025, “We Belong Here, and We’re Here to Stay,” underscores the demand for authentic inclusion. However, when AI, a technology increasingly shaping our visual world, consistently fails at disability representation, whether by depicting disability inaccurately or by resorting to stereotypes (like blindfolds), it actively undermines this vital message.

This isn’t just about a few “bad” images. It reflects:

- Bias in Training Data: AI models learn from vast datasets, often scraped from the internet. If these datasets disproportionately feature able-bodied individuals, misrepresent disability, or are filled with stereotypical imagery, the AI will simply replicate and amplify these biases.

- Lack of Nuance and Understanding: Mobility aids like white canes, guide dog harnesses, wheelchairs, and crutches are not decorative props. They are functional tools integrated into a person’s lived experience. AI’s inability to understand their proper use demonstrates a superficial rather than a deep, contextual understanding.

- Reinforcement of Stereotypes: The appearance of blindfolds or individuals appearing helpless reinforces harmful and outdated stereotypes that the disability community actively fights against.

- Erosion of Authentic Disability Representation: If AI is unable to create accurate images, it risks erasing authentic disability experiences from the visual landscape it generates, leading to further marginalization.

Expert Recap: The Disability Community Must Lead AI Training

These results are a powerful call to action. We cannot wait for AI developers to “figure it out” on their own when it comes to disability representation in AI. The solution lies in proactive, intentional collaboration with the disability community.

My Strong Suggestion: The disability community must be at the forefront of training AI models to appropriately represent people with disabilities. This isn’t just about providing more data; it’s about providing the right data, infused with lived experience and expert knowledge. This kind of nuanced understanding is what allows AI to move beyond stereotypes and towards genuine connection – for example, a recent guest on my podcast, The Water Prairie Chronicles, created the first AI friend that is a stuffed animal, showing the incredible potential for AI to foster companionship and understanding when developed thoughtfully. (You can listen to that fascinating episode here).

Here’s how we can make a difference:

- Curate and Annotate Data: Actively participate in creating diverse, accurate, and contextually rich datasets that include a wide spectrum of disabilities, mobility aids, and authentic daily life scenarios. This means photos, videos, and descriptions of real people with disabilities living their lives, captured and annotated with the input of disabled individuals themselves.

- Consultation and Collaboration: AI companies must engage disabled individuals, disability organizations, and accessibility experts throughout the entire AI development lifecycle – from conceptualization and data collection to model training, evaluation, and deployment.

- Define “Success”: The disability community should define what “accurate” and “respectful” representation looks like for AI, moving beyond purely aesthetic considerations to functional and contextual understanding.

- “Red Teaming” for Bias: Disabled users and experts can actively “red team” (test for vulnerabilities) AI models specifically for disability bias, identifying problematic outputs and providing feedback for correction.

- Advocate for Inclusive AI Ethics: Support policies and ethical guidelines that mandate diverse representation and accessibility as core principles in AI development, not just an afterthought.
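The “Define Success” and red-teaming steps above could be made concrete with a shared checklist that disabled reviewers write and apply. The following is a minimal sketch, assuming a hypothetical rubric format: the criteria echo the white cane prompt in this article, but the `score_image` function, the field names, and the sample review are illustrative placeholders, not results from the experiment.

```python
# Hypothetical rubric for the white cane prompt; in practice the criteria
# would be written by blind and low-vision reviewers.
WHITE_CANE_RUBRIC = [
    "cane is white with a red tip",
    "cane tip sweeps the ground ahead of the user",
    "cane is held with a correct grip",
    "no blindfold or closed-eye stereotype",
]


def score_image(rubric, review):
    """Return (passed, failed_criteria) for one human-reviewed image.

    `review` maps each criterion to True/False as judged by a reviewer.
    A criterion missing from the review counts as failed.
    """
    failed = [c for c in rubric if not review.get(c, False)]
    return len(failed) == 0, failed


# Placeholder review, NOT an actual result from the experiment.
sample_review = {
    "cane is white with a red tip": True,
    "cane tip sweeps the ground ahead of the user": False,
    "cane is held with a correct grip": True,
    "no blindfold or closed-eye stereotype": True,
}

passed, failed = score_image(WHITE_CANE_RUBRIC, sample_review)
print(passed)  # False
print(failed)  # ['cane tip sweeps the ground ahead of the user']
```

A checklist like this keeps the judgment with human reviewers while giving developers specific, repeatable feedback about what went wrong in each image.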

This Disability Pride Month, let’s acknowledge AI’s current blind spots and commit to illuminating them. By actively participating in shaping the future of AI, the disability community can ensure that this powerful technology reflects the true diversity of humanity, fostering a more inclusive and accurately represented world.

What are your thoughts? Have you encountered similar issues with AI image generators? How do you think the disability community can best engage with AI development? Share your experiences and ideas in the comments below!

Related Content:

Episode #131: The Unseen Bias: What AI Image Generators Are Hiding About Disability

#DisabilityPrideMonth #AIbias #InclusiveAI #Accessibility #TechForGood #DisabilityRepresentation #AIethics #July2025 #WeBelongHere

FAQ (Frequently Asked Questions)

Q1: What is the main problem with AI image generators and disability representation?

AI image generators frequently misrepresent people with disabilities and their mobility aids. They often generate stereotypical images, fail to depict mobility aids accurately or functionally, and can even create anatomical distortions, showing a lack of understanding of the lived experience of disabled individuals.

Q2: Which AI image creators did you test for this experiment?

I tested Artistly.ai, Grok on X, Canva, ChatGPT 4o (omni), Gemini 2.5, Leonardo.AI, and Deepai.org.

Q3: Why is accurate AI representation of disability important, especially during Disability Pride Month?

Accurate representation is crucial for visibility, acceptance, and combating stereotypes. During Disability Pride Month, we celebrate disability as a natural part of human diversity. When AI fails to represent disabled individuals accurately or respectfully, it undermines efforts for inclusion and perpetuates harmful biases.

Q4: How did AI perform when asked to create an image of a blind person with a white cane or a guide dog?

AI models consistently struggled with these prompts. They often depicted white canes incorrectly (e.g., as walking sticks, as a golf putter, or held in the air) and sometimes added a blindfold to the user. They also failed to generate proper guide dog harnesses with rigid handles, instead showing standard leashes or pet harnesses.

Q5: Did AI struggle with depicting wheelchairs or crutches accurately?

Yes, for wheelchairs, some platforms produced odd anatomical errors or incorrect chair designs. For crutches, AI frequently generated the wrong type of crutches (e.g., underarm instead of forearm) and struggled with realistic orthopedic braces (AFOs), often making them look like ski boots or random bands.

Q6: What is the proposed solution to improve AI’s representation of disability?

The primary solution is for the disability community to be directly involved in training AI models. This includes curating diverse and accurate training data, consulting with AI developers, and defining what respectful and authentic representation truly means to ensure AI reflects the full spectrum of human experience.